Peter Naur's legacy: Mental models in the age of AI coding

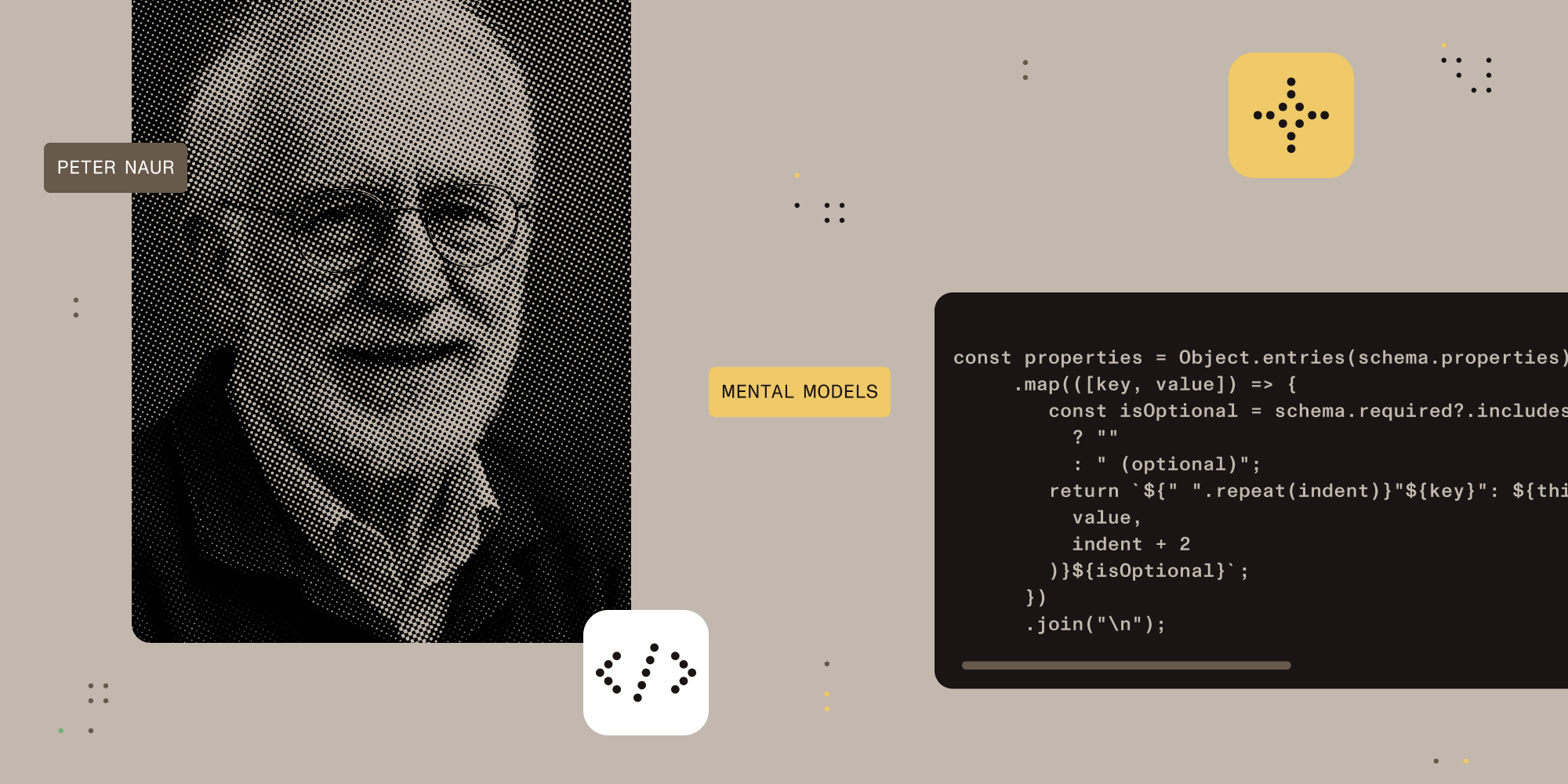

In 1985, computer scientist Peter Naur argued that the essence of programming lies not in writing code but in building mental models of both problems and their solutions. This perspective, radical at the time, seems almost prophetic in today's AI-powered development landscape. As coding assistants increasingly suggest, complete, and even generate entire functions for us, Naur's focus on understanding code deeply, rather than just writing it, offers valuable guidance.

What Naur understood about the programmer’s mind

In “Programming as Theory Building,” Naur presented an idea that still resonates today. In his words: “Programming in essence is building a certain kind of theory, a theory of how certain affairs of the world will be handled by a computer program.” He rejected the common view that programming is just about producing code or documentation. Instead, Naur insisted that when we program, we're actually building detailed mental maps that connect messy real-world problems to software solutions. These mental maps cover not only the mechanical aspects of how code runs but also the “why” behind every decision: why this approach over others, why certain tradeoffs made sense, and, most importantly, how the program's structure mirrors the structure of the real-world problems it solves. As Naur put it, a program is “similar to a theory in the manner in which it relates to the real world affairs.”

The most striking aspect of Naur's perspective is his assertion that these mental models cannot be fully captured in documentation, comments, or even the code itself. As he stated, “an essential part of any program, the theory of it, is something that could not conceivably be expressed, but is inextricably bound to human beings.” When developers who share a strong understanding collaborate, they can evolve software coherently because they carry the same theory in their minds. But when that theory is lost, such as when a team disperses without effectively transferring its understanding, the program suffers what Naur termed “the death of a program,” which “happens when the programmer team possessing its theory is dissolved.” From then on, modifications become increasingly difficult, even though the code itself remains intact. Naur was so convinced of this that he considered the “revival of a program” nearly impossible, suggesting that “the existing program text should be discarded” and the problem solved anew rather than attempting to recover the lost understanding. While I disagree with Naur's drastic remedy of complete rewrites, which often create more problems than they solve, his fundamental insight about the importance of shared mental models remains relevant today.

The AI coding assistant revolution

Since Naur articulated his theory-building framework, the programming landscape has transformed dramatically with the emergence of AI coding assistants. Tools like GitHub Copilot, Cody, and Cursor can now generate entire functions, complete complex code blocks, and even refactor existing implementations with minimal human input. This has given rise to practices such as “vibe coding,” in which even people with no programming background can build working applications by relying on an intuitive sense of whether code looks right rather than reasoning through each implementation detail. Some people emphasize the difference between AI-assisted programming and vibe coding, and I think it's an important distinction to make.

However, this revolution introduces a subtle but significant tension with Naur's vision. While AI tools excel at producing syntactically correct and functionally adequate code, they don't build mental models; they generate code by pattern-matching against their training data. A developer who accepts AI suggestions without thoroughly understanding them risks leaving gaps in their mental model of the system. The code works, passes tests, and satisfies requirements, but the theory behind it (the deep understanding of why it works) might never form in the developer's mind. This creates exactly the scenario Naur warned about: code that functions correctly but lacks the complete mental model that would allow it to evolve coherently over time.

The hidden costs of outsourcing understanding

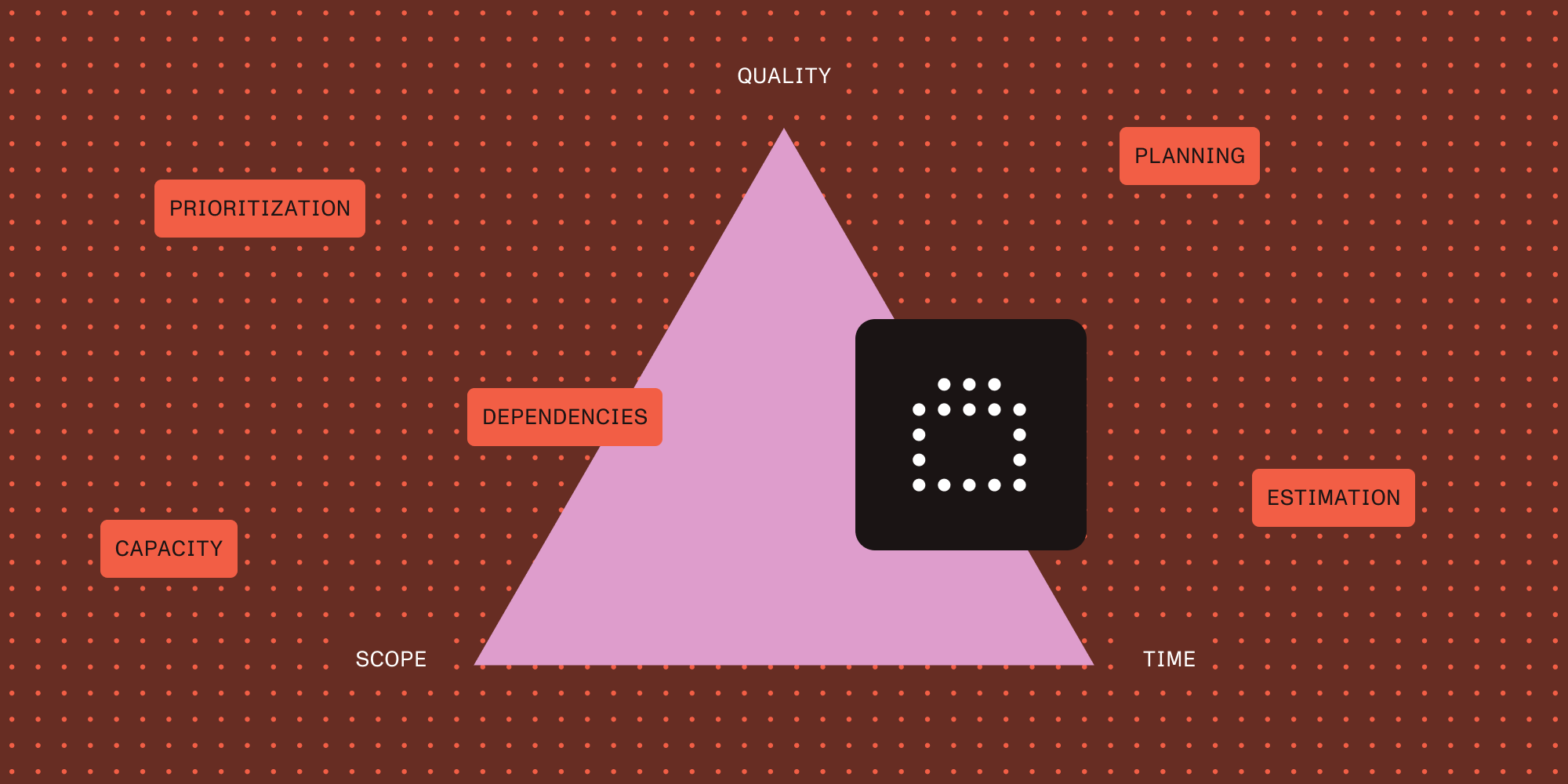

This gap between functioning code and comprehensive mental models raises an important question: What happens when teams increasingly rely on AI-generated code without developing the theory Naur considered essential? When developers accept suggestions from AI, they may skip the cognitive work that traditionally built their mental models. The immediate productivity gains are tangible (tasks that once took hours can be completed in minutes), but the cumulative cost to a team's shared understanding can be significant and is much harder to see. We risk creating codebases that function properly yet that no one understands at the depth Naur identified as critical for sustainable development.

Over time, a codebase may accumulate these theory-poor segments, creating what might be called “knowledge debt”: a variation of classical technical debt in which the issue isn't just a poor implementation but a missing mental model. When requirements change or bugs emerge in these areas, developers may find themselves acting as “archaeologists” of their own codebase, trying to reverse-engineer the theory that should have guided the implementation in the first place. This is precisely the scenario Naur described decades ago, except that now we can bring it about through our own development practices rather than through team turnover.

Using AI while preserving mental models

Despite these risks, Naur’s theory-building perspective doesn’t require us to abandon AI coding tools; it challenges us to use them more thoughtfully. The key is approaching AI suggestions as starting points for understanding rather than endpoints for implementation. When an AI assistant generates a complex algorithm or elegant solution, treat it as an expert’s suggestion that demands comprehension before acceptance. Ask questions like: Why did the AI choose this approach? What alternatives might exist? How does this solution connect to our existing mental model of the system? This questioning process transforms passive code acceptance into active theory building, preserving the cognitive work that Naur recognized as essential.

Teams can put this approach into practice by adjusting their workflows and culture. Code reviews should explicitly assess understanding, not just correctness: reviewers might ask the author to explain why a solution works, not merely confirm that it does. Pair programming sessions with AI tools can become collaborative theory-building exercises in which developers discuss and question the assistant's suggestions together. Documentation can evolve to capture not just what code does but the reasoning behind accepting particular implementations, creating artifacts that support (though never replace) the shared mental model.

Modern AI coding assistants, including our Nutrient Copilot extension, can support this understanding-first approach. By combining code suggestions with access to our documentation, developers can explore both implementation details and the concepts behind them. When writing document-processing code, developers can reference relevant documentation alongside AI suggestions, helping build stronger mental models of the system. This integration of code assistance with documentation access shows how AI tools can complement, rather than replace, the deep understanding needed for long-term development.

Conclusion

Peter Naur's insights about the importance of mental models give us valuable guidance in this new era of AI-assisted coding. The best programmers of tomorrow won't simply be those who write prompts most effectively, but those who maintain a deep understanding of their code while using AI to handle routine implementation tasks. By approaching AI suggestions thoughtfully, treating them as opportunities to build knowledge rather than shortcuts around understanding, we can embrace these powerful tools without sacrificing the shared mental models that keep software maintainable and adaptable over time. The future of programming isn't about choosing between human understanding and AI efficiency but about combining them thoughtfully: AI can handle the mechanics while we humans focus on what Naur recognized decades ago as our most valuable contribution, which is building comprehensive mental models of the systems we create.