Our Journey to ARM

In today’s blog post, I’ll talk about our efforts to port our server-based products, PSPDFKit Web Server-Backed and PSPDFKit Processor, to the ARM architecture. I’ll outline why we decided to support it now, how we managed to develop that support without access to any ARM-based hardware, and what we learned along the way. This won’t take the shape of a structured how-to guide; rather, it’s a recollection of events, with some especially relevant bits of code thrown in. So let’s get started.

If you want to learn more about Apple silicon at PSPDFKit, check out our Server Development post.

Why We Support ARM

Let’s start with the first question: Why should we even support ARM? According to the Stack Overflow 2020 Developer Survey, about 27 percent of respondents use macOS. With Apple’s announcement of the Developer Transition Kit (DTK) in June 2020, and with its transition to ARM for all Macs well on its way, it’s key for anyone who creates developer tools to support this user base. This includes us: While PSPDFKit Web Server-Backed and Processor work on their own, our customers still need to integrate them with their apps, so it’s important that our products run on the platforms developers actually use for their day-to-day work.

The second and perhaps more immediate benefit comes when it’s actually time to deploy our products. Amazon launched ARM-based AWS Graviton instances all the way back in 2018. The company has since continued to refine and improve the performance of these instances, and at the time of writing, they offer the same performance as their x86_64-based counterparts at a lower price. We’ll come back to performance in a bit, but this means that offering ARM support allows our customers to save money when deploying our software.

With the why out of the way, let’s look at how we actually did it.

How We Made It Run

1. Shared Core

There’s one piece of software that underpins all of our products, from our iOS and Android SDKs all the way to PSPDFKit Web Server-Backed, and that is our shared core. We had a head start here: Thanks to our mobile SDK heritage, the core already ran on ARM CPUs. That being said, it wasn’t as easy as calling make on an ARM machine and being done with it. Most of our CI still runs on x86_64-based machines, because the cloud provider we currently use for our CI infrastructure only provides x86_64 machines, so we decided that cross-compiling was the way to go. And while ARM machines are cheaper on AWS, it made more sense to use the x86_64 machines we already had. For cross-compiling, we had to set up a CMake toolchain that instructs our build to produce an ARM64 binary and link against ARM64 libraries. If you want to know more specifics about this, be sure to let us know, and we can write about it in more detail.
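For readers who want a starting point, the following is a minimal sketch (not our actual toolchain) of what a CMake toolchain file for ARM64 Linux can look like. It assumes a Debian or Ubuntu build host with the gcc-aarch64-linux-gnu and g++-aarch64-linux-gnu cross-compiler packages installed and ARM64 libraries located under /usr/aarch64-linux-gnu; the file name aarch64-linux-gnu.cmake is made up for this example. To keep everything in shell, the script writes the file with a heredoc:

```shell
#!/bin/sh
# Hypothetical sketch: write a CMake toolchain file for cross-compiling to ARM64 Linux.
# Assumptions: Debian/Ubuntu host with aarch64-linux-gnu cross compilers installed,
# ARM64 headers and libraries under /usr/aarch64-linux-gnu. File name is made up.
cat > aarch64-linux-gnu.cmake <<'EOF'
# Target system: 64-bit ARM Linux
set(CMAKE_SYSTEM_NAME Linux)
set(CMAKE_SYSTEM_PROCESSOR aarch64)

# Cross compilers that run on the x86_64 host but emit ARM64 code
set(CMAKE_C_COMPILER aarch64-linux-gnu-gcc)
set(CMAKE_CXX_COMPILER aarch64-linux-gnu-g++)

# Search for headers, libraries, and packages only in the ARM64 root,
# but keep using host programs (code generators and similar tools)
set(CMAKE_FIND_ROOT_PATH /usr/aarch64-linux-gnu)
set(CMAKE_FIND_ROOT_PATH_MODE_PROGRAM NEVER)
set(CMAKE_FIND_ROOT_PATH_MODE_LIBRARY ONLY)
set(CMAKE_FIND_ROOT_PATH_MODE_INCLUDE ONLY)
set(CMAKE_FIND_ROOT_PATH_MODE_PACKAGE ONLY)
EOF
```

You would then pass the file to CMake when configuring a separate build directory, for example with cmake -S . -B build-arm64 -DCMAKE_TOOLCHAIN_FILE="$PWD/aarch64-linux-gnu.cmake". The FIND_ROOT_PATH_MODE settings are the important part: They keep CMake from accidentally linking the ARM64 binary against the host’s x86_64 libraries. If your ARM64 libraries live somewhere else (for example, in a dedicated sysroot), adjust CMAKE_FIND_ROOT_PATH accordingly.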

With this part done, let’s look at our Docker images.

2. Docker Images

If you’re familiar with PSPDFKit Web Server-Backed or Processor, you’ll know that we ship them as Docker images, ready for you to run on your infrastructure or development machine. Docker supports building ARM64 images on an x86_64 machine using QEMU emulation under the hood. Sadly, the overhead of emulating a different architecture makes this incredibly slow for all but the simplest images. To give you an idea of the slowdown, building our Web Server-Backed release image normally takes about three minutes, whereas building the same image for ARM in QEMU takes about 10 minutes on the same machine. That was too slow for development, and it wouldn’t have been feasible for our eventual CI deployment either, since quick turnaround times are essential there.

AWS to the Rescue

Luckily for us, Docker Buildx has great support for remote builder instances. Assuming you have a sufficiently fast connection, you can connect to a remote host running on native ARM hardware and use it to build your Docker images. All you need is SSH access to the remote host, with Docker installed there as well.

Let’s look at a short example. If you already have a docker-compose.yml file with an app service, you’d usually build it with docker-compose like this:

```shell
docker-compose build app
```

Now, if you wanted to use a remote builder, the equivalent would look like this:

```shell
# Register the remote ARM host, reached over SSH, as a Buildx builder
docker buildx create --name arm-machine ssh://user@host
# Build the app service defined in docker-compose.yml on that builder
# and load the resulting image into the local Docker daemon
docker buildx bake --builder arm-machine --load app
```

This is what we did, and from there, we could work on making our Docker image actually build on ARM. Keep in mind that for our CI setup, we still use docker-compose for building; we only used Buildx locally, since it made working with remote builders much easier.

This part was actually surprisingly easy. Our images are based on Debian, and most of our dependencies are installed directly from Debian’s main APT repository, so they required no additional work to support our ARM image. The few dependencies we build from source also already supported the ARM architecture, so again, no additional work was required. A handful of our dependencies are prebuilt binaries we download; for those, we had to adjust our Dockerfile to account for the target platform when determining the download URL. This takes the shape of a simple if statement in the RUN instruction:

```dockerfile
# Automatic platform arguments like TARGETPLATFORM must be redeclared
# inside the build stage before RUN instructions can use them
ARG TARGETPLATFORM
RUN if [ "$TARGETPLATFORM" = "linux/arm64" ]; then \
      export NODE_PLATFORM="arm64"; \
    else \
      export NODE_PLATFORM="x64"; \
    fi && \
    curl -sL "https://nodejs.org/dist/v${NODEJS_VERSION}/node-v${NODEJS_VERSION}-linux-${NODE_PLATFORM}.tar.gz"
```

Apart from this, only a few minor details needed adjusting, such as packages whose available versions differ between ARM and x86_64.
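Docker sets TARGETPLATFORM to values like linux/amd64 or linux/arm64, while Node.js names its Linux tarballs with x64 and arm64, so the if statement above is really a small mapping. As a sketch of a slightly stricter variant (our own illustration, not what ships in our Dockerfile), the same mapping can be written as a shell function that fails on platforms it doesn’t know instead of silently falling back to x64. The function name node_platform is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: map Docker's TARGETPLATFORM value to the architecture
# suffix used in Node.js Linux download file names.
node_platform() {
  case "$1" in
    linux/amd64) echo "x64" ;;
    linux/arm64 | linux/arm64/v8) echo "arm64" ;;
    *)
      # Fail loudly instead of downloading a binary for the wrong CPU
      echo "unsupported platform: $1" >&2
      return 1
      ;;
  esac
}
```

Inside a RUN instruction, an assignment such as NODE_PLATFORM="$(node_platform "$TARGETPLATFORM")" followed by && curl ... would then stop the build on an unsupported platform. Docker also provides a TARGETARCH build argument (amd64 or arm64), which could be mapped the same way.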

Performance Testing

Before releasing PSPDFKit Web Server-Backed and Processor for ARM, we wanted to make sure their performance wasn’t noticeably worse than that of our x86_64 builds. So we ran the same load testing scenario we use for our x86_64 builds against our new ARM build. Our test essentially simulates a constant stream of users viewing PDFs: We measure the average time it takes until the first page is rendered, along with how many users encounter errors because the server is too overloaded to respond in time. For these tests, we always pick a load that’s slightly more than the given hardware can handle, to simulate a worst-case scenario.

When running the same load against an x86_64-based c5a.large EC2 instance and an ARM64-based c6g.large EC2 instance, we found that they perform essentially identically. Given that the Graviton-based instances are cheaper, this confirmed that shipping the ARM version has a clear benefit for our customers, so we were good to go ahead and make ARM a first-class citizen.
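The two numbers we compare are easy to compute once each simulated user’s outcome is recorded. The following is a hypothetical sketch, not our actual load-testing tooling: It assumes a CSV with one line per user in the form status,first_page_ms (for example, ok,840 or error,0) and prints the user count, the average time to the first rendered page for successful users, and the share of users who hit an error:

```shell
#!/bin/sh
# Hypothetical helper: summarize load-test results read from stdin.
# Assumed input format, one line per simulated user: status,first_page_ms
summarize_load_test() {
  awk -F, '
    $1 == "ok"    { ok++; total_ms += $2 }   # successful users: accumulate render time
    $1 == "error" { errors++ }               # users the overloaded server failed to serve
    END {
      users = ok + errors
      avg_ms = (ok > 0) ? total_ms / ok : 0
      error_pct = (users > 0) ? 100 * errors / users : 0
      printf "users=%d avg_first_page_ms=%.1f error_rate=%.1f%%\n", users, avg_ms, error_pct
    }'
}
```

Running it over the results from each instance type (for example, summarize_load_test < c6g-results.csv, with a made-up file name) yields directly comparable figures for both architectures.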

CI Integration

Once we established that we wanted to move ahead, it was time to integrate ARM into our CI infrastructure. As you can read elsewhere on our blog, we use Buildkite here at PSPDFKit. What makes Buildkite so great is that it runs on infrastructure you manage. That means there’s no need to wait around for your CI provider to add ARM machines; with Buildkite, we could use AWS to add agents running on ARM processors to our fleet.

All our CI jobs run in Docker, so to get ARM images built and tested, all we had to do was run the existing steps of our CI pipeline on our new ARM machines. Surprisingly, this also turned out to be easy. Only a few test dependencies in our whole stack didn’t yet support ARM, most notably jest-screenshot, which relies on native code to perform image comparisons. Since its native part hadn’t yet been updated to support ARM, we had to replace it with the pure JS implementation provided by jest-image-snapshot.
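Because every step already runs in Docker, targeting ARM mostly comes down to scheduling the same step on different agents. In Buildkite, agents can be grouped into queues, and a step selects a queue through its agents attribute. The following is a hypothetical sketch (not our real pipeline) of a dynamic pipeline script that emits one copy of a test step per queue; the queue names x86_64 and arm64 and the test command are placeholders:

```shell
#!/bin/sh
# Hypothetical sketch of a Buildkite dynamic pipeline generator: it emits the
# same Dockerized test step once per agent queue. Queue names and the test
# command are placeholders, not PSPDFKit's real configuration.
emit_pipeline() {
  echo "steps:"
  for queue in "$@"; do
    # Each step is pinned to a queue, i.e. to agents of one architecture
    cat <<EOF
  - label: "test (${queue})"
    command: "docker-compose run --rm app ./run-tests.sh"
    agents:
      queue: "${queue}"
EOF
  done
}

# Usage in CI: ./pipeline.sh | buildkite-agent pipeline upload
emit_pipeline x86_64 arm64
```

The design keeps a single definition of each step, so adding an architecture means adding a queue name rather than duplicating pipeline configuration.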

The key lessons you can take away from this are:

- Dockerizing your CI is awesome. Because many parts of our CI and testing infrastructure rely on well-maintained images such as the node Docker image, we essentially got ARM support for free.

- Carefully consider whether you really need that dependency with native code. While jest-screenshot’s promise of being “around 10x faster than jest-image-snapshot” sounds great on paper and is most likely true, we probably lost more time replacing it with something that supports ARM than its speed ever saved us.

Finally, let’s look at the last thing we had to do.

Managing the Release

PSPDFKit Web Server-Backed and Processor are shipped to our customers as Docker images that are hosted on Docker Hub. Let me first give you a general outline of what our release process looks like:

- We build release-ready Docker images for Web Server-Backed and Processor.

- CI runs a full test suite against our newest release.

- If everything is green, CI uploads the Docker image to our internal release management service. This is where we manage all our releases, store build artifacts, and ultimately hit the deploy button that pushes to Docker Hub.

Most of this release process isn’t affected by our new ARM images; we already addressed the CI tests and building Docker images in the previous sections, so the only thing left to handle is the step where we push to Docker Hub.

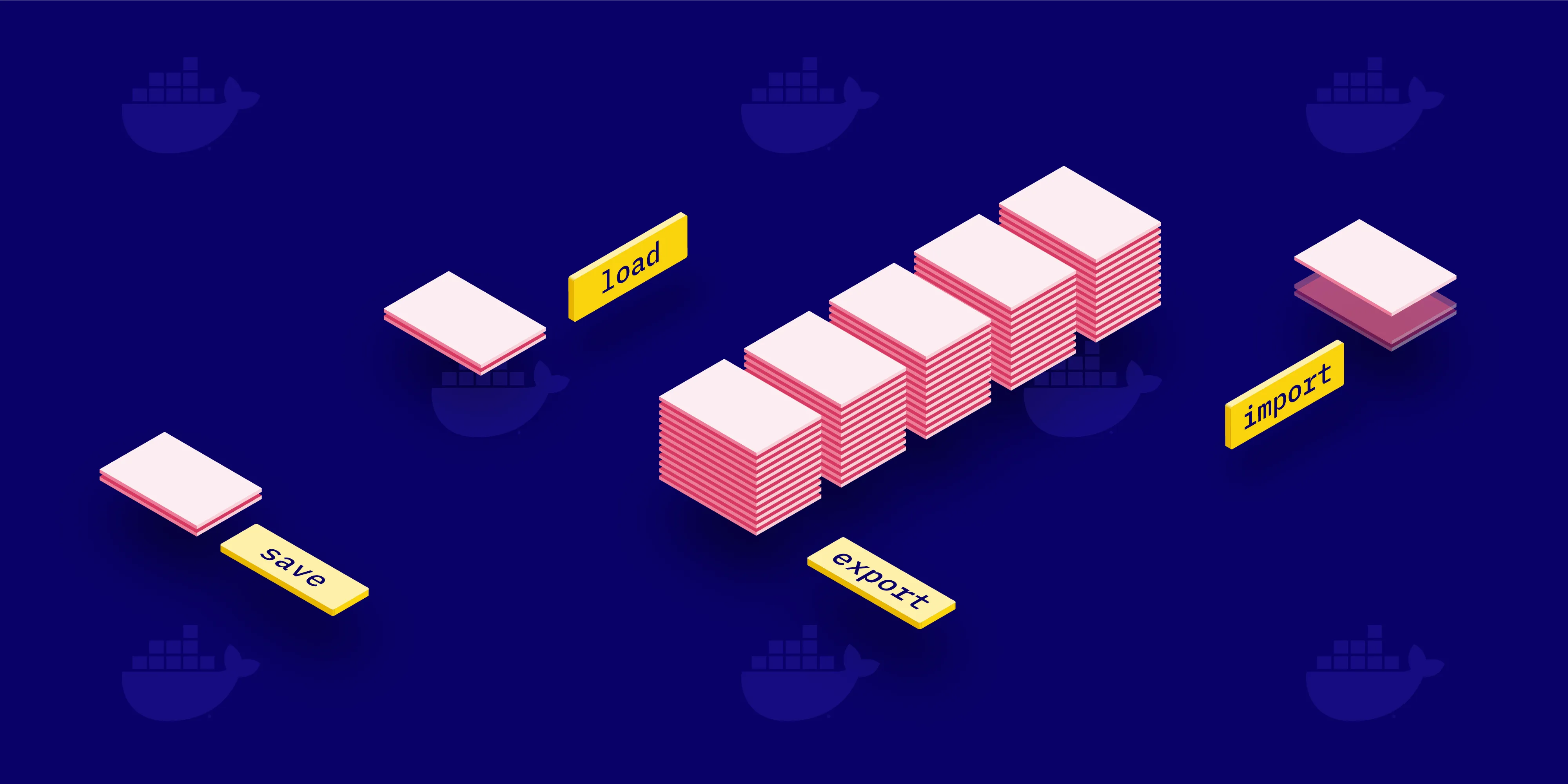

Before ARM support, this step was straightforward:

- We uploaded a tarred and gzipped Docker image to our internal release management service.

- Once we were ready, we hit the deploy button.

- We loaded the Docker image into the local Docker daemon and pushed it to Docker Hub.
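For reference, the final step of that old flow boils down to two Docker commands. The sketch below is illustrative rather than our release service’s actual code: The function name and arguments are hypothetical, it assumes the archive was saved with the release tag already applied, and setting DOCKER=echo prints the commands instead of running them:

```shell
#!/bin/sh
# Hypothetical sketch of the old, single-architecture publishing step.
# DOCKER can be overridden (e.g. DOCKER=echo) for a dry run.
DOCKER="${DOCKER:-docker}"

push_single_arch() {
  archive="$1"  # gzipped output of docker save, uploaded by CI
  tag="$2"      # tag the image was saved with, e.g. pspdfkit/pspdfkit:2020.3
  # docker load accepts gzip-compressed archives directly
  "$DOCKER" load --input "$archive" &&
    "$DOCKER" push "$tag"
}
```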

Now it’s slightly more complicated. CI produces multiple images — one for each architecture. Since only our internal release management service has access to the credentials to Docker Hub, all the hard parts of assembling a multi-architecture image need to be handled there. So let’s take a look at that.

Assembling a Multi-Architecture Image

The magic ingredient here is the Docker manifest list. This is where everything ultimately comes together. Let’s have a look at the manifest list of one of our multi-architecture builds:

```json
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.docker.distribution.manifest.list.v2+json",
  "manifests": [
    {
      "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
      "size": 6813,
      "digest": "sha256:a65a1b57f0d2d06806a6ddbcc94dd4f4ae98b9f3c23a11233930d682ac52d438",
      "platform": {
        "architecture": "arm64",
        "os": "linux"
      }
    },
    {
      "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
      "size": 7871,
      "digest": "sha256:50c0c938a81df5ef7ab523ba4eed09ede52e4690f36a7db0cc4ad874b80dfc06",
      "platform": {
        "architecture": "amd64",
        "os": "linux"
      }
    }
  ]
}
```

To quote the docs:

The manifest list is the “fat manifest” which points to specific image manifests for one or more platforms. ... A client will distinguish a manifest list from an image manifest based on the Content-Type returned in the HTTP response.

Essentially, when a client now pulls pspdfkit/pspdfkit:2020.3 from Docker Hub, it receives a manifest list similar to the one shown above. Then, based on the architecture of the underlying hardware, it pulls either our arm64 image or our x86_64 (amd64) image. This means that nothing changes for our customers, and running the image on Apple silicon, for example, now just works, since Docker automatically pulls the arm64 image.
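Note that the manifest list uses Docker’s architecture names (amd64, arm64), which differ from what the operating system typically reports. The following simplified sketch is our own illustration, not Docker’s actual implementation: a hypothetical docker_arch function that maps the machine name printed by uname -m to the architecture value a client would look for in the manifest list:

```shell
#!/bin/sh
# Hypothetical helper: translate `uname -m` output into the architecture
# names used in the "platform" entries of a Docker manifest list.
docker_arch() {
  case "$1" in
    x86_64 | amd64) echo "amd64" ;;
    # Linux reports aarch64, while macOS on Apple silicon reports arm64
    aarch64 | arm64) echo "arm64" ;;
    *)
      echo "unknown machine type: $1" >&2
      return 1
      ;;
  esac
}
```

For example, docker_arch "$(uname -m)" prints arm64 on an ARM-based Graviton instance. To check which platforms a published tag actually covers, docker manifest inspect pspdfkit/pspdfkit:2020.3 prints its manifest list.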

You might be wondering how to create a manifest list. It’s quite simple using the docker manifest command. First, create a manifest list that specifies all the separate images that will make up your final multi-architecture image:

```shell
docker manifest create pspdfkit/pspdfkit:2020.3 \
  --amend local-registry/pspdfkit:2020.3-x86_64 \
  --amend local-registry/pspdfkit:2020.3-aarch64
```

Then you can push the manifest list and all referenced images using a single command:

```shell
# -p (--purge) removes the local copy of the manifest list after the push
docker manifest push -p pspdfkit/pspdfkit:2020.3
```

One thing we didn’t realize initially that’s worth pointing out: There’s no need to separately push the images built for each architecture. When you push the manifest list, the referenced images are uploaded to your Docker registry as well.
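One way to put these pieces together in a release script is sketched below. It’s an illustration, not our release service’s actual code, and it rests on several assumptions: CI uploads one gzipped archive per architecture named pspdfkit-VERSION-ARCH.tar.gz, each image inside is already tagged for an intermediate registry, and each image is pushed there first, because docker manifest create looks up the referenced images in a registry. LOCAL_REGISTRY, the archive names, and the function name are placeholders, and DOCKER=echo turns the script into a dry run:

```shell
#!/bin/sh
# Hypothetical sketch: assemble and publish a multi-architecture image.
# Assumptions: CI uploaded pspdfkit-$version-$arch.tar.gz per architecture,
# each already tagged $LOCAL_REGISTRY/pspdfkit:$version-$arch.
# DOCKER can be overridden (e.g. DOCKER=echo) for a dry run.
DOCKER="${DOCKER:-docker}"
LOCAL_REGISTRY="${LOCAL_REGISTRY:-local-registry}"

publish_multiarch() {
  version="$1"
  shift # remaining arguments are architecture suffixes, e.g. x86_64 aarch64
  target="pspdfkit/pspdfkit:$version"
  amend_args=""
  for arch in "$@"; do
    image="$LOCAL_REGISTRY/pspdfkit:$version-$arch"
    "$DOCKER" load --input "pspdfkit-$version-$arch.tar.gz" || return 1
    # docker manifest create resolves images from a registry, so push each one first
    "$DOCKER" push "$image" || return 1
    amend_args="$amend_args --amend $image"
  done
  # amend_args is deliberately unquoted so it splits into separate arguments
  # shellcheck disable=SC2086
  "$DOCKER" manifest create "$target" $amend_args || return 1
  "$DOCKER" manifest push --purge "$target"
}
```

With DOCKER=echo, publish_multiarch 2020.3 x86_64 aarch64 prints the load and push commands for both architectures, followed by the same docker manifest create and docker manifest push commands used above.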

And with that, we walked through our whole journey — from the idea of shipping an ARM version of PSPDFKit Web Server-Backed and Processor to actually having done it.

Conclusion

This is a bit of a different style of article: It’s less focused on specific implementation details and more of a big-picture look at all the steps it took to get there, from inception to shipping the final product. Still, I hope it gave you some insight into how we do things here and motivates you to add ARM support to your own product. For more on this topic, check out our follow-up post, which discusses what it’s like for our developers to use Apple silicon when working on our Server products.