Building trust in enterprise AI: A deep dive into security and privacy for document intelligence on Azure Government and AWS Bedrock


At a glance

AI brings speed and new insight to document workflows, from editing and comparison to redaction and data extraction. But its adoption is slowed by real business fears:

- 42 percent of professionals cite lack of demonstrable security as the main barrier to AI investment.

- 37 percent point to broader ethical concerns.

- Risks range from confidential data leakage and prompt injection attacks to GDPR exposure and copyright disputes.

This paper shows how deploying Nutrient’s AI services on Azure Government or AWS Bedrock on GovCloud addresses those concerns head-on. Both platforms provide sovereign-grade environments with strict isolation, compliance certifications, and explicit contractual safeguards that ensure customer data is never used to train public foundation models.

Key takeaways

- Concrete protections — Azure Government offers physical and logical isolation, U.S.-only cleared operators, and confidential computing to protect data, even in use. AWS Bedrock on GovCloud provides FedRAMP High authorization, an isolated environment for regulated workloads, and guardrails for content and PII filtering.

- Proven governance — Applying the NIST AI Risk Management Framework provides a structured approach to identifying, measuring, and managing AI-specific risks.

- Customer-first contracts — Both Microsoft and AWS commit contractually that prompts and outputs remain private and aren’t used to train their foundation models, removing one of the biggest barriers to enterprise AI.

By leveraging these safeguards, organizations can confidently deploy Nutrient’s AI document assistants and workflows in even the most sensitive environments. The conversation shifts from risk avoidance to secure innovation.

1 — Deconstructing the AI risk landscape for document-centric workflows

Trust begins with a clear view of the risks. For enterprises processing contracts, financial reports, medical records, or discovery materials, AI doesn’t just extend existing concerns — it introduces new ones across privacy, security, compliance, and intellectual property. These risks are real, not hypothetical, and they explain why many organizations hesitate to deploy AI in sensitive document workflows.

1.1 — Anatomy of AI-specific threats: Data privacy, security, and compliance

AI systems thrive on large datasets, and this reliance magnifies long-standing challenges. Sensitive documents often include personally identifiable information (PII), protected health information (PHI), or proprietary data such as trade secrets and financial results. A single vulnerability can lead to regulatory penalties, lawsuits, and reputational harm.

One fear is unauthorized repurposing of personal data — for example, when resumes or medical records collected for a specific purpose are reused to train models without consent. Such practices collide with laws like GDPR and undermine public trust. Even when direct misuse is avoided, advanced models can infer sensitive attributes (political leanings, health conditions) from seemingly harmless data.

The attack surface for large language models (LLMs) continues to expand. Prompt injection attacks can induce models to bypass safeguards, expose confidential information, or forward sensitive documents, as documented by GDPRLocal and IBM. Because LLMs themselves contain vast amounts of embedded data, they become valuable targets for exfiltration, according to Frost Brown Todd LLP and IBM. And the machine learning stack is sprawling: Popular frameworks rely on hundreds of thousands of lines of code and hundreds of dependencies, each a potential entry point.

A particularly dangerous vulnerability is data leakage:

- Training-time leakage occurs when models memorize unique examples, later reproducing contracts, emails, or medical records verbatim.

- Inference-time leakage happens when attackers probe the model with crafted prompts to extract sensitive data from training or context.

Both scenarios can trigger GDPR or HIPAA violations and lead to multimillion-dollar liabilities.

Finally, compliance challenges are mounting. GDPR’s “right to be forgotten” is especially problematic: Once personal data is baked into a model, deleting it without full retraining is nearly impossible. Requests for deletion are rising sharply — up 43 percent year over year — leaving companies stuck between legal requirements and technical limitations. GDPR also mandates Data Protection Impact Assessments (DPIAs), but opaque model behavior makes it difficult to produce assessments regulators will accept.

1.2 — The “black box” problem: Accuracy, bias, and hallucinations

Even when data is secure, the quality and transparency of AI outputs remain pressing concerns. Half of professionals cite lack of demonstrable accuracy as a barrier to AI adoption. The issue is not poor grammar or style — it’s hallucinations: confident, polished answers that are factually wrong. In document-heavy fields like law, finance, or medicine, a fabricated clause or figure can do real damage.

Bias compounds the problem. If training data is skewed, models can produce discriminatory results — in hiring, lending, or legal contexts — exposing organizations to ethical and legal challenges.

Then there’s explainability. Many advanced models function as black boxes, making it nearly impossible to trace why a given output occurred. Regulators in finance and healthcare often demand clear, auditable reasoning, but most LLMs cannot provide it. Without transparency, accountability and governance suffer.

The rise of shadow AI makes this worse: Employees adopt unsanctioned public tools out of convenience, pasting sensitive company data into systems outside IT’s control. A sanctioned, secure internal solution isn’t just about enabling new capabilities — it’s about shutting down uncontrolled risk.

1.3 — The data training dilemma: Copyright and confidentiality

The provenance of training data is one of AI’s most contentious issues. Many leading models have been built by scraping content from the internet without permission or compensation, and courts are now testing whether this constitutes copyright infringement. One recent ruling found that training on copyrighted legal materials was not “fair use,” since the use was commercial and non-transformative, and it directly harmed the original market.

For businesses, the downstream risk is clear: If you rely on AI tools trained on questionable data, you may face copyright liability, even if your own use is legitimate.

Confidentiality adds another layer. Employees and professionals are bound by NDAs and client agreements, but the simple act of pasting a contract clause or financial detail into a public AI tool may constitute a breach. If that information leaks — or worse, shows up in another user’s response — the fallout could include lawsuits, lost clients, and reputational harm.

For organizations like Nutrient’s clients, this underscores why sovereign AI platforms matter: Data must remain private, never be reused for training, and always be protected within compliant, isolated environments.

2 — Azure Government: Architecting a sovereign fortress for sensitive data

For organizations handling highly regulated or mission-critical documents, infrastructure choice isn’t just about performance — it’s about trust. Microsoft Azure Government isn’t a repackaged version of commercial Azure, but a sovereign cloud environment designed specifically for the stringent needs of the U.S. government and its partners. Its architecture — physical, logical, operational, and technological — directly addresses the AI risks outlined in the previous section.

2.1 — The sovereign cloud advantage: Physical, logical, and personnel controls

The strength of Azure Government begins with isolation.

- Physical and logical separation — Data centers and networks are located exclusively within the continental U.S. Azure Government is a distinct cloud, legally and technically separate from commercial Azure, and available only to eligible U.S. government entities and partners. This prevents colocation of sensitive data with commercial or foreign tenants, raising the baseline for compliance and security.

- Personnel screening and operations — All operations are performed by screened U.S. citizens who undergo rigorous background checks. This aligns with requirements such as the FBI CJIS Security Policy, which mandates fingerprint-based background checks for anyone with access to unencrypted criminal justice information (CJI). Unlike commercial environments with globally distributed support staff, Azure Government enforces human-level security as part of its chain of trust.

Together, these technical, process, and personnel controls interlock to form a holistic perimeter of defense.

2.2 — A multi-layered defense for AI workloads

Azure Government inherits Azure’s defense-in-depth model, enhanced with controls for sovereign environments.

- Identity and network access — Identity is managed through Microsoft Entra ID, and network access is controlled with Network Security Groups (NSGs) and Azure Firewall. Microsoft Sentinel adds advanced threat detection and response.

- Encryption at rest and in transit — All customer data is encrypted by default. With customer-managed keys (CMKs), Nutrient can generate and store its own keys in FIPS 140-validated HSMs via Azure Key Vault. Because key access stays under Nutrient’s control, revoking or deleting a key cuts off decryption of sensitive documents and fine-tuned models, including by Microsoft.

- Private connectivity — ExpressRoute provides dedicated, private links between on-premises networks and Azure Government. Private Link, combined with disabling public network access, ensures that AI services like Azure OpenAI can be reached only through private endpoints, isolating all traffic from the public internet.
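Private endpoints only protect traffic if clients actually resolve the service to them. The minimal sketch below checks that a hostname resolves only to private addresses before any document is sent. It assumes Private Link with private DNS integration is already configured. The function names are illustrative, and the hostname in the usage note is a hypothetical placeholder.

```python
import ipaddress
import socket


def resolved_addresses(hostname: str, port: int = 443) -> set[str]:
    """Return every IP address the hostname currently resolves to."""
    infos = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    # Each entry's sockaddr tuple starts with the address string.
    return {info[4][0] for info in infos}


def all_private(addresses: set[str]) -> bool:
    """True only if there is at least one address and every one is private."""
    return bool(addresses) and all(
        ipaddress.ip_address(addr).is_private for addr in addresses
    )


def assert_private_endpoint(hostname: str) -> set[str]:
    """Fail closed if the hostname resolves to any public address."""
    addresses = resolved_addresses(hostname)
    if not all_private(addresses):
        raise RuntimeError(
            f"{hostname} resolves to public addresses: {sorted(addresses)}"
        )
    return addresses
```

Run from inside the virtual network as a deployment smoke test, a call such as `assert_private_endpoint("nutrient-docs.openai.azure.us")` would stop the rollout if DNS still points at a public IP. The resource name is hypothetical, and the `openai.azure.us` suffix should be confirmed for the actual Azure Government resource.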

2.3 — Protecting data-in-use: Confidential computing

Encryption at rest and in transit is now expected. Azure pushes further by securing data while in memory using the following methods and tools.

- Trusted Execution Environments (TEEs) — Specialized CPUs create isolated, encrypted enclaves where data remains inaccessible even to the host OS, hypervisor, or administrators.

- Verifiable attestation — With Azure Attestation, clients can cryptographically verify that a TEE is authentic, is running the intended code, and hasn’t been tampered with before any sensitive workload is decrypted.

For Nutrient’s AI workflows, this means processes such as redaction or extraction can execute inside confidential VMs or containers. Sensitive documents stay encrypted outside the enclave throughout processing, including during model inference when the model itself runs inside the confidential environment. This closes one of the most persistent cloud vulnerabilities.
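Attestation only adds protection if keys are released solely after the evidence checks out. The minimal sketch below shows that release decision. It assumes the attestation token's signature has already been verified against the attestation service's signing keys, so the code evaluates claims only. The claim names (`tee_type`, `measurement`, `debuggable`) and sample values are hypothetical; real claim names depend on the TEE type and the attestation provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReleasePolicy:
    """Hypothetical key-release policy for a confidential workload."""
    tee_type: str                          # expected enclave technology
    allowed_measurements: frozenset[str]   # hashes of approved code images
    require_debug_disabled: bool = True


def may_release_key(claims: dict, policy: ReleasePolicy) -> bool:
    """Return True only if the verified claims satisfy every policy condition.

    Missing claims count as failures, so the check fails closed.
    """
    if claims.get("tee_type") != policy.tee_type:
        return False
    if claims.get("measurement") not in policy.allowed_measurements:
        return False
    # An absent debug flag is treated as "debuggable", so the default is to refuse.
    if policy.require_debug_disabled and claims.get("debuggable", True):
        return False
    return True
```

Failing closed on missing claims is deliberate. A token with an unexpected shape should never cause a key to be released.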

2.4 — The zero-training guarantee

One of the most persistent fears is that customer data will be used to train public models. Microsoft addresses this with explicit contractual and architectural separation:

- Prompts, completions, embeddings, and fine-tuning data aren’t shared with other customers or with OpenAI, and aren’t used to improve OpenAI or Microsoft foundation models.

- Fine-tuned models created by Nutrient remain Nutrient’s exclusive property.

- Combined with CMK, clients retain full sovereignty; deleting a key renders all stored data, models, and backups indecipherable — a cryptographic kill switch.
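To make the "cryptographic kill switch" concrete, the toy sketch below illustrates crypto-shredding: the ciphertext still exists, but once the key is destroyed it can no longer be decrypted. This is an illustration only, not production cryptography. A real deployment would use AES-GCM data keys wrapped by a customer-managed key in Azure Key Vault, and the `Keyring` class is a hypothetical stand-in for that key store. An HMAC-SHA256 counter-mode keystream replaces the cipher so the example runs on the standard library, and it provides no integrity protection.

```python
import hashlib
import hmac
import secrets


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Derive `length` pseudorandom bytes from key + nonce (toy stream cipher)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        block = hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256)
        out.extend(block.digest())
        counter += 1
    return bytes(out[:length])


class Keyring:
    """Hypothetical stand-in for a customer-controlled key store."""

    def __init__(self) -> None:
        self._keys: dict[str, bytes] = {}

    def create(self, key_id: str) -> None:
        self._keys[key_id] = secrets.token_bytes(32)

    def destroy(self, key_id: str) -> None:
        # The kill switch: ciphertexts under this key become unreadable.
        del self._keys[key_id]

    def encrypt(self, key_id: str, plaintext: bytes) -> tuple[bytes, bytes]:
        nonce = secrets.token_bytes(16)
        stream = _keystream(self._keys[key_id], nonce, len(plaintext))
        return nonce, bytes(p ^ s for p, s in zip(plaintext, stream))

    def decrypt(self, key_id: str, nonce: bytes, ciphertext: bytes) -> bytes:
        key = self._keys[key_id]  # raises KeyError once the key is destroyed
        stream = _keystream(key, nonce, len(ciphertext))
        return bytes(c ^ s for c, s in zip(ciphertext, stream))
```

After `destroy()`, the stored nonce and ciphertext remain, but `decrypt()` has nothing to work with. This mirrors how deleting a CMK renders stored data and backups indecipherable.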

Abuse monitoring does exist: Algorithms may flag potential misuse, and in rare cases, authorized reviewers examine samples. However, these samples are stored separately and are never used for training, and access is tightly logged. In Azure Government, monitoring is further restricted, giving customers more direct responsibility for safe use.

2.5 — Verifiable compliance portfolio

Azure Government pairs technical controls with extensive certifications:

- FedRAMP High and DoD IL4/5/6 authorizations validate readiness for sensitive and classified workloads.

- CJIS compliance ensures suitability for criminal justice data, with personnel screening requirements built in.

- ITAR/EAR support provides controls for export-restricted defense data, including U.S. residency and FIPS 140 encryption.

Few commercial clouds match this breadth of government-grade certifications.

2.6 — Shared responsibility in practice for Nutrient

Azure Government secures the infrastructure; Nutrient secures its workloads.

- Microsoft’s responsibility

  - Physical data centers, networks, hosts, and PaaS security

- Nutrient’s responsibility

  - Data governance and classification

  - Role-based identity and access management

  - Application security: safe API handling and input validation

  - Network configuration: isolating workloads through private endpoints and virtual networks

This model isn’t a burden but a form of empowerment: Nutrient can tailor its security posture while relying on Microsoft’s sovereign foundation. Together, they create a defensible architecture for AI-driven document intelligence.
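On Nutrient's side of the line, "safe API handling and input validation" can start with rejecting uploads that violate policy before they reach any AI service. The sketch below is a minimal example. The 50 MiB limit, the accepted types, and the function name are hypothetical. Magic-byte checks only confirm the container format (a DOCX file is a ZIP archive), so deeper parsing would still be needed.

```python
MAX_BYTES = 50 * 1024 * 1024  # hypothetical upload limit (50 MiB)

# Leading "magic bytes" expected for each accepted content type.
SIGNATURES = {
    "application/pdf": b"%PDF-",
    # DOCX is a ZIP container, so this only proves "some ZIP file".
    "application/vnd.openxmlformats-officedocument.wordprocessingml.document": b"PK\x03\x04",
}


def validate_upload(data: bytes, declared_type: str) -> None:
    """Raise ValueError if an upload violates the (hypothetical) policy."""
    if not data:
        raise ValueError("empty upload")
    if len(data) > MAX_BYTES:
        raise ValueError("upload exceeds size limit")
    signature = SIGNATURES.get(declared_type)
    if signature is None:
        raise ValueError(f"unsupported type: {declared_type}")
    if not data.startswith(signature):
        raise ValueError("content does not match declared type")
```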

3 — AWS Bedrock on GovCloud: Secure, compliant, and controlled generative AI

Amazon Web Services (AWS) delivers generative AI through a combination of AWS GovCloud (US) — an isolated cloud environment for regulated workloads — and Amazon Bedrock, a managed service for foundation models. Together, they provide the controls, policies, and compliance credentials required by U.S. government agencies and highly regulated industries.

3.1 — GovCloud (US): A secure enclave for regulated industries

Like Azure Government, AWS GovCloud (US) is designed from the ground up for sovereignty.

- Isolation and sovereignty — Physically and logically separate regions (US-East and US-West) ensure data never leaves U.S. soil. The infrastructure is staffed only by screened U.S. citizens, and access is restricted to vetted U.S. entities.

- Operational consistency — GovCloud mirrors commercial AWS services and APIs, giving developers continuity. But advanced services like Amazon Bedrock require a request-and-approval process, adding an administrative checkpoint and accountability for activation.

This model combines the familiarity of AWS with the heightened oversight required by government standards.

3.2 — Built-in safeguards: Amazon Bedrock Guardrails

Bedrock extends beyond infrastructure security with application-layer safeguards — an essential response to the risks unique to generative AI.

- Content filtering and denied topics — There are adjustable filters for hate speech, violence, sexual content, and more, plus configurable “denied topics” to keep assistants on task.

- PII filtering — Guardrails automatically detect common PII types and support custom regex-based filters for proprietary patterns. Detected data can be blocked or masked with placeholders (e.g. {NAME}).

- Grounding checks — Contextual grounding checks score each response against the supplied source material and filter out responses that fall below configured grounding or relevance thresholds, reducing hallucinations and enforcing faithfulness to source material.

- IAM enforcement — Using the bedrock:GuardrailIdentifier condition key, organizations can enforce mandatory guardrails for every inference call, centralizing governance.
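The IAM enforcement pattern can be expressed as a policy document. The sketch below builds one in Python and assumes the guardrail ARN carries the guardrail version. The account ID, guardrail ID, and version are hypothetical placeholders. The `aws-us-gov` partition reflects GovCloud ARNs, but the exact ARN format should be checked against current Bedrock documentation.

```python
import json


def guardrail_enforcement_policy(region: str, account_id: str,
                                 guardrail_id: str, version: str) -> dict:
    """IAM policy allowing Bedrock inference only with one specific guardrail."""
    # GovCloud ARNs use the aws-us-gov partition.
    guardrail_arn = (f"arn:aws-us-gov:bedrock:{region}:{account_id}:"
                     f"guardrail/{guardrail_id}:{version}")
    actions = ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"]
    condition_key = "bedrock:GuardrailIdentifier"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowInferenceWithRequiredGuardrail",
                "Effect": "Allow",
                "Action": actions,
                "Resource": "*",  # narrow to specific model ARNs in practice
                "Condition": {"StringEquals": {condition_key: guardrail_arn}},
            },
            {
                # Negated operators also match when the key is absent,
                # so requests that name no guardrail at all are denied too.
                "Sid": "DenyInferenceWithoutRequiredGuardrail",
                "Effect": "Deny",
                "Action": actions,
                "Resource": "*",
                "Condition": {"StringNotEquals": {condition_key: guardrail_arn}},
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(guardrail_enforcement_policy(
        "us-gov-west-1", "111122223333", "abc123", "1"), indent=2))
```

The explicit Deny is there because an Allow alone would not stop a principal who receives inference permissions through another policy.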

For Nutrient’s AI document redaction, comparison, and data extraction tools, guardrails provide an automated layer of real-time safety and compliance.
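The guardrail features listed above come together in a single guardrail definition. The sketch below builds keyword arguments in the shape the boto3 `bedrock` client's `create_guardrail` call accepts. The guardrail name, denied topic, regex, messages, and thresholds are hypothetical policy choices, and availability of each feature in GovCloud should be confirmed. The client is passed in rather than imported, so the definition can be reviewed and tested offline.

```python
def guardrail_definition() -> dict:
    """Keyword arguments for a boto3 'bedrock' client's create_guardrail call."""
    strong = {"inputStrength": "HIGH", "outputStrength": "HIGH"}
    return {
        "name": "doc-assistant-guardrail",  # hypothetical name
        "blockedInputMessaging": "This request can't be processed under policy.",
        "blockedOutputsMessaging": "The response was withheld under policy.",
        "contentPolicyConfig": {
            "filtersConfig": [
                {"type": "HATE", **strong},
                {"type": "VIOLENCE", **strong},
                {"type": "SEXUAL", **strong},
                # Prompt-attack detection applies to inputs, so output strength is NONE.
                {"type": "PROMPT_ATTACK", "inputStrength": "HIGH", "outputStrength": "NONE"},
            ]
        },
        "topicPolicyConfig": {
            "topicsConfig": [{
                "name": "InvestmentAdvice",  # hypothetical denied topic
                "definition": "Recommendations to buy, sell, or hold specific securities.",
                "examples": ["Should we buy more shares of this company?"],
                "type": "DENY",
            }]
        },
        "sensitiveInformationPolicyConfig": {
            "piiEntitiesConfig": [
                {"type": "US_SOCIAL_SECURITY_NUMBER", "action": "BLOCK"},
                {"type": "NAME", "action": "ANONYMIZE"},
                {"type": "EMAIL", "action": "ANONYMIZE"},
            ],
            "regexesConfig": [{
                "name": "ContractNumber",  # hypothetical proprietary pattern
                "description": "Internal contract IDs such as CTR-123456",
                "pattern": r"CTR-\d{6}",
                "action": "ANONYMIZE",
            }],
        },
        "contextualGroundingPolicyConfig": {
            "filtersConfig": [
                # Responses scoring below these thresholds are filtered out.
                {"type": "GROUNDING", "threshold": 0.8},
                {"type": "RELEVANCE", "threshold": 0.8},
            ]
        },
    }


def create_doc_guardrail(bedrock_client) -> tuple[str, str]:
    """Create the guardrail with a boto3 'bedrock' client; return (ID, version)."""
    response = bedrock_client.create_guardrail(**guardrail_definition())
    return response["guardrailId"], response["version"]
```

In a deployment, the client would be something like `boto3.client("bedrock", region_name="us-gov-west-1")`. A numbered version would then be published with `create_guardrail_version` before production calls reference it.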

3.3 — Data sovereignty with private connectivity

AWS eliminates exposure to the public internet through interface VPC endpoints powered by AWS PrivateLink.

- VPC endpoints — Nutrient can connect applications running inside its own VPC directly to Bedrock through interface endpoints. All traffic flows over AWS’s private backbone, never touching the public internet.

- Secure fine-tuning — Training data stored in encrypted S3 buckets is accessed only via private VPC interfaces, with KMS providing customer-managed keys. Model fine-tuning and artifact generation remain fully contained within this private, encrypted environment.

This design ensures end-to-end protection for document processing, from upload through inference and model customization.
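Both controls map onto two boto3 calls: EC2 `create_vpc_endpoint` and Bedrock `create_model_customization_job`. The sketch below only builds the request arguments so they can be reviewed and tested offline. Every ID, ARN, and bucket name is a hypothetical placeholder. The `bedrock-runtime` service name follows the usual `com.amazonaws.<region>.<service>` pattern, which should be confirmed in GovCloud with `describe_vpc_endpoint_services`. The hyperparameter names shown are typical of Amazon Titan text models and vary by base model.

```python
def bedrock_runtime_endpoint_request(region: str, vpc_id: str,
                                     subnet_ids: list[str],
                                     security_group_ids: list[str]) -> dict:
    """Kwargs for EC2 create_vpc_endpoint: an interface endpoint to Bedrock runtime."""
    return {
        "VpcEndpointType": "Interface",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.bedrock-runtime",
        "SubnetIds": subnet_ids,
        "SecurityGroupIds": security_group_ids,
        # Private DNS makes the default Bedrock hostname resolve to the endpoint.
        "PrivateDnsEnabled": True,
    }


def fine_tuning_request(job_name: str, model_name: str, role_arn: str,
                        base_model_id: str, training_uri: str, output_uri: str,
                        kms_key_arn: str, subnet_ids: list[str],
                        security_group_ids: list[str]) -> dict:
    """Kwargs for Bedrock create_model_customization_job with KMS and VPC settings."""
    for uri in (training_uri, output_uri):
        if not uri.startswith("s3://"):
            raise ValueError(f"expected an s3:// URI, got {uri!r}")
    return {
        "jobName": job_name,
        "customModelName": model_name,
        "roleArn": role_arn,
        "baseModelIdentifier": base_model_id,
        "trainingDataConfig": {"s3Uri": training_uri},
        "outputDataConfig": {"s3Uri": output_uri},
        # Hyperparameter names and valid ranges differ per base model.
        "hyperParameters": {"epochCount": "2", "batchSize": "1",
                            "learningRate": "0.00001"},
        # Encrypt the resulting custom model with a customer-managed KMS key.
        "customModelKmsKeyId": kms_key_arn,
        # Keep the job's data access inside the customer's VPC subnets.
        "vpcConfig": {"subnetIds": subnet_ids,
                      "securityGroupIds": security_group_ids},
    }
```

With real values, the dictionaries would be passed as `ec2.create_vpc_endpoint(**...)` and `bedrock.create_model_customization_job(**...)`.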

3.4 — Guarding against data misuse

AWS reinforces its position with clear contractual and technical guarantees:

- No training on customer data — Prompts, completions, and fine-tuning data aren’t used to improve base models.

- Private model copies — Fine-tuned models are isolated, customer-owned instances of foundation models. Model providers cannot access the service accounts or logs that store customer interactions.

This creates a one-way data street: Customer data can be used for inference, but it never flows back to enrich shared or public models.

3.5 — Compliance: FedRAMP and DoD authorizations

AWS GovCloud services, including Amazon Bedrock, are backed by rigorous third-party audits:

- FedRAMP High — Bedrock has achieved authorization to handle highly sensitive but unclassified government data.

- DoD IL4/5 — In June 2025, AWS became the first provider to win IL4 and IL5 approval for specific Bedrock models, enabling use with Controlled Unclassified Information (CUI) and other defense workloads.

A list of approved models is outlined below.

| Model provider | Model name | FedRAMP High | DoD IL4/5 |
|---|---|---|---|
| Amazon | Titan models | Authorized | Authorized |
| Anthropic | Claude 3.5 Sonnet v1 | Authorized | Authorized |
| Anthropic | Claude 3 Haiku | Authorized | Authorized |
| Meta | Llama 3 8B | Authorized | Authorized |
| Meta | Llama 3 70B | Authorized | Authorized |

For Nutrient clients, this table is tangible evidence: AI workloads can be mapped to specific models with the compliance credentials required for their sector.

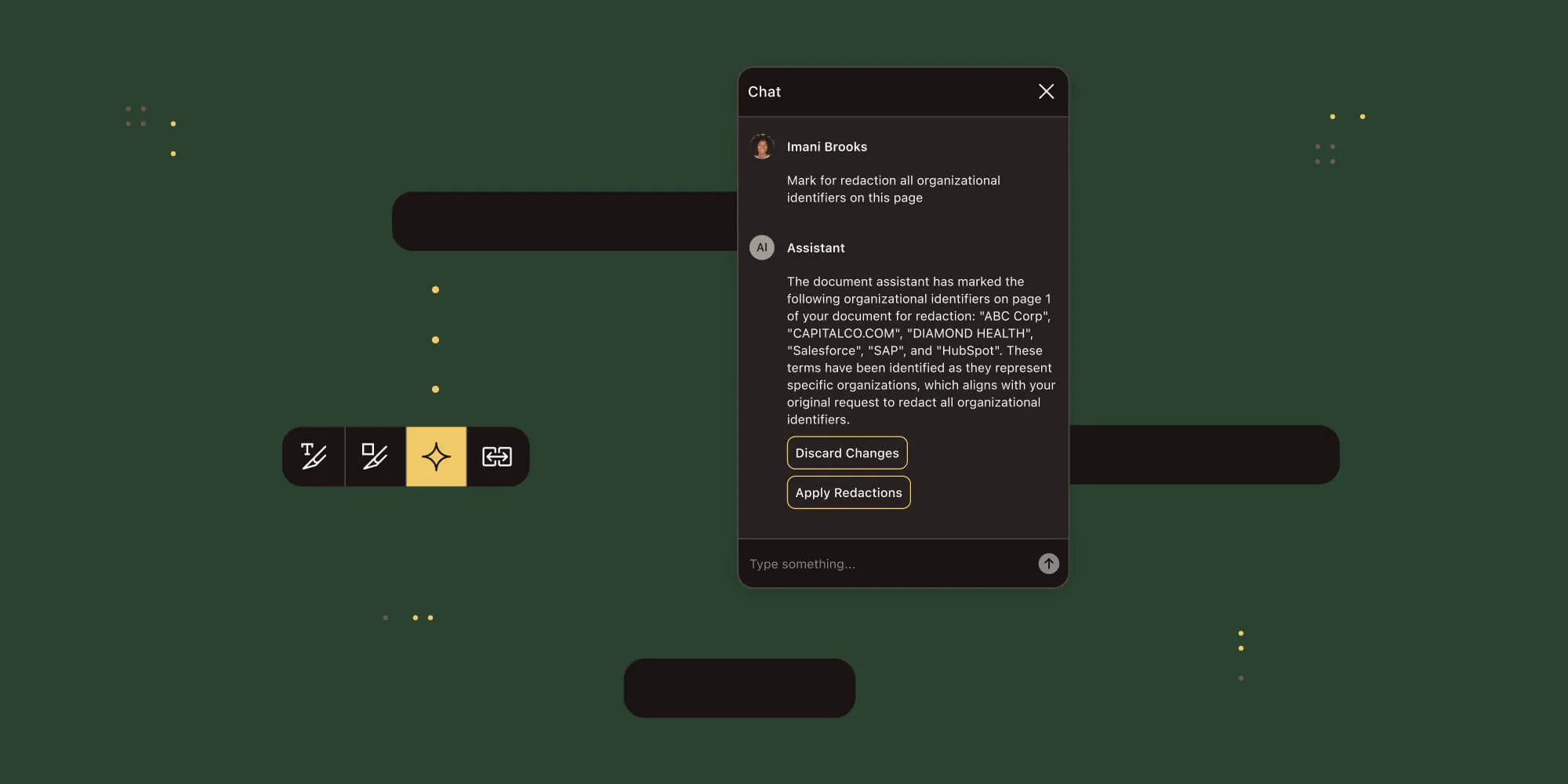

4 — Practical application: Securing Nutrient’s AI document services

Azure Government and AWS Bedrock on GovCloud are only as valuable as the protections they deliver in real workflows. This section turns platform capabilities into concrete safeguards for Nutrient’s AI assistants, comparison, redaction, and data-extraction features — and shows how to evidence those safeguards to your users.

4.1 — Mapping Nutrient’s services to cloud security controls

Nutrient delivers several AI-powered document capabilities — assistance, extraction, redaction, and comparison — each with a different mix of operational value and risk exposure. To make these features defensible in regulated environments, security must be mapped to the point where the risk arises, not just applied generically at the cloud perimeter. This section details how Azure Government and AWS Bedrock on GovCloud controls align with each capability, showing how risks such as data leakage, prompt injection, or missed redactions are addressed in practice.

AI document assistant and data extraction

These features often mix public and confidential content.

Risk

- An employee asks about a sensitive contract; the model leaks PII or a proprietary clause. Content is later reused to train a public model.

Mitigation

- AWS Bedrock — Configure guardrails to detect and mask PII in prompts and outputs; apply contextual grounding checks so summaries stick to the source.

- Azure Government — Run the end-to-end flow inside a confidential VM so documents stay encrypted in memory during processing.

- Both — Rely on explicit no-training commitments so prompts/outputs/fine-tuning data aren’t used to improve public models.
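For the assistant and extraction flow, the guardrail and the grounding check are attached to each request. The minimal sketch below uses request shapes from the Bedrock Runtime Converse API. The source document is tagged `grounding_source` and the user's question `query`, so the contextual grounding check can compare the answer against the document. The model and guardrail identifiers are hypothetical, and the helper names are illustrative.

```python
def grounded_request(model_id: str, guardrail_id: str, guardrail_version: str,
                     source_text: str, question: str) -> dict:
    """Kwargs for a bedrock-runtime converse() call with guardrail and grounding."""
    return {
        "modelId": model_id,
        "messages": [{
            "role": "user",
            "content": [
                # Reference material the answer must be grounded in.
                {"guardContent": {"text": {"text": source_text,
                                           "qualifiers": ["grounding_source"]}}},
                # The user's question, used for the relevance check.
                {"guardContent": {"text": {"text": question,
                                           "qualifiers": ["query"]}}},
            ],
        }],
        "guardrailConfig": {
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
            "trace": "enabled",  # include guardrail assessment details in the response
        },
    }


def guardrail_intervened(response: dict) -> bool:
    """True if the guardrail intervened in this converse() response."""
    return response.get("stopReason") == "guardrail_intervened"
```

An application would pass the dictionary to `converse(**request)`. It should treat an intervened response as "no answer" and not fall back to an ungrounded one.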

AI document redaction

High-stakes handling of sensitive fields (PII/PHI).

Risk

- Missed entities (e.g. a Social Security number) lead to a breach.

Mitigation

- Azure Government — Process unredacted documents inside TEEs; sensitive data never appears in host memory in clear text.

- AWS Bedrock — Bedrock has no direct TEE equivalent for this workload, so rely on layered controls: GovCloud isolation, VPC endpoints, and KMS customer-managed keys.
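Because a single missed entity is the failure mode, a cheap second pass over redacted output helps on either platform. The sketch below is a minimal regex-based check and assumes redacted documents are available as text. The patterns are illustrative and catch only structured identifiers such as Social Security numbers and email addresses. They will not catch names or free-text PHI.

```python
import re

# Illustrative patterns for structured identifiers that should never survive redaction.
RESIDUAL_PATTERNS = {
    # US SSN shape, excluding never-issued values (000, 666, or 9xx area; 00 group; 0000 serial).
    "us_ssn": re.compile(r"\b(?!000|666|9\d\d)\d{3}-(?!00)\d{2}-(?!0000)\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def residual_findings(redacted_text: str) -> list[tuple[str, str]]:
    """Return (pattern label, matched text) for anything that looks unredacted."""
    findings = []
    for label, pattern in RESIDUAL_PATTERNS.items():
        for match in pattern.finditer(redacted_text):
            findings.append((label, match.group()))
    return findings
```

A release step would fail closed, refusing to publish any document for which `residual_findings` returns a non-empty list.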

AI document comparison

Legal/financial diffs where tiny discrepancies matter.

Risk

- Confidential documents traverse the public internet or touch third-party systems.

Mitigation

- Both — Use ExpressRoute/Private Link (Azure) or PrivateLink + VPC endpoints (AWS) so traffic never hits the public internet.

- Both — Enforce access with granular IAM, and rely on no-training guarantees to prevent secondary use of content.

Risk-mitigation matrix

| Client fear/AI risk | Mitigation via Azure Government | Mitigation via AWS Bedrock on GovCloud |
|---|---|---|
| Confidential data will be used to train public AI models. | Explicit, contractual zero-training for Azure OpenAI; fine-tuned models remain private. | Explicit no-training policy; fine-tuning creates a private copy of the base model. |
| Prompt injection will leak sensitive information. | Azure AI Content Safety filters; processing inside confidential computing prevents host-level exfiltration. | Guardrails with denied topics/word filters; IAM enforces mandatory guardrails per call. |
| Hallucinations will produce inaccurate outputs. | Ground Azure OpenAI with customer data via Azure AI Search for higher factuality. | Contextual grounding checks filter out answers that aren’t supported by the provided sources. |
| PII or sensitive data will be exposed. | Confidential VMs encrypt data in use; CMK keeps decryption under client control. | Sensitive information filtering automatically blocks or masks PII in real time. |
| We’ll violate GDPR, HIPAA, or CJIS. | FedRAMP High, DoD IL5/6, HIPAA BAA; contractual CJIS commitments. | FedRAMP High; HIPAA eligible; specific Bedrock models authorized at DoD IL4/5. |
| Cloud admins can access our data. | Confidential computing blocks access outside the enclave, even for privileged admins; U.S.-screened personnel only. | GovCloud run by screened U.S. citizens; architectural isolation from model providers and Bedrock team. |

4.2 — Comparative analysis: Strategic platform selection for document processing

There isn’t a single “most secure” choice, only a better fit for a given threat model and operational reality. The emphasis differs:

- Azure Government leans into sovereign isolation and hardware-level protections. Confidential computing counters sophisticated threats (e.g. hypervisor compromise, privileged insider risk). For national security, intelligence, or finance clients who prioritize hardware-backed assurance that raw content is shielded even during processing, this is compelling.

- AWS Bedrock on GovCloud excels at application-layer, content-aware controls. Guardrails mitigate common, high-frequency risks — misuse by authorized users, harmful content, accidental PII leakage — with precise, real-time policy enforcement. For healthcare, public agencies, or any team focused on output behavior and safety, this is a strong match.

Operational model comparison

| Feature/control | Azure Government details | AWS GovCloud (US) details |
|---|---|---|
| Isolation model | Sovereign cloud: physically/logically separate instance of Azure | Isolated regions within the AWS ecosystem |
| Data center locations | U.S. regions, including DoD and Secret regions | U.S. regions (US-East, US-West) |
| Personnel screening | Operated by screened U.S. citizens | Operated by screened U.S. citizens on U.S. soil |
| Access eligibility | U.S. government entities and vetted partners | U.S. agencies and vetted organizations in regulated industries |
| Key compliance | FedRAMP High, DoD IL2/4/5/6, CJIS (contractual), ITAR, HIPAA BAA | FedRAMP High, DoD IL2/4/5, CJIS, ITAR, HIPAA eligible |
| Data-in-use protection | Confidential computing (TEEs) encrypts memory during processing | Standard AWS controls; no direct general-purpose TEE equivalent |
| AI content safety | Azure AI Content Safety integration | Bedrock Guardrails (content, PII, topics) |

4.3 — Implementation blueprint: How Nutrient proves security

Assurances land when they’re verifiable. Under the shared-responsibility model, Nutrient should turn configuration into evidence.

Configuration and auditing

- Enforce least-privilege IAM, strict network policies (VPCs, private endpoints), and customer-managed key hygiene (scheduled rotation and tightly scoped key access).

- Codify and continuously audit controls with Azure Policy and AWS Config, and capture every environment action in activity logs (Azure Monitor activity log, AWS CloudTrail) retained in immutable storage.
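As one example of codifying a control, the sketch below builds an Azure Policy definition. It denies Azure AI services (Cognitive Services) accounts whose public network access is not disabled, which keeps Azure OpenAI reachable only through private endpoints. It assumes the `Microsoft.CognitiveServices/accounts/publicNetworkAccess` alias used by Azure's built-in definition for this control; confirm the alias, and prefer the built-in definition where one exists. The display name is hypothetical.

```python
import json

AI_ACCOUNT_TYPE = "Microsoft.CognitiveServices/accounts"
# Alias assumed from Azure's built-in "disable public network access" definition.
PUBLIC_ACCESS_ALIAS = "Microsoft.CognitiveServices/accounts/publicNetworkAccess"


def deny_public_ai_access_policy() -> dict:
    """Policy definition properties: deny AI accounts unless public access is Disabled."""
    return {
        "displayName": "Deny public network access to AI services (example)",
        "mode": "Indexed",
        "policyRule": {
            "if": {
                "allOf": [
                    {"field": "type", "equals": AI_ACCOUNT_TYPE},
                    {"field": PUBLIC_ACCESS_ALIAS, "notEquals": "Disabled"},
                ]
            },
            "then": {"effect": "deny"},
        },
    }


if __name__ == "__main__":
    print(json.dumps(deny_public_ai_access_policy(), indent=2))
```

The `policyRule` object is what Azure Policy evaluates. Assigned at subscription or management-group scope, it blocks noncompliant deployments before they exist, instead of only reporting them afterward.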

Client-facing evidence

- Security architecture diagram — Show private networking, encryption (at rest/in transit/in use), IAM boundaries, and model-serving paths.

- Inherited compliance documents — Provide relevant FedRAMP package excerpts and attestations from Azure/AWS.

- NIST AI RMF alignment — Share governance policies, risk maps, test metrics (e.g. redaction false-negative rate, extraction accuracy), and monitoring results mapped to the risks outlined in Section 1.
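The redaction and extraction metrics named above are straightforward to compute once a labeled test set exists. The sketch below is minimal and makes two assumptions: ground-truth redactions are recorded as (start, end) character spans, and extraction results are field/value dictionaries. Exact-span matching is a deliberately strict, hypothetical scoring rule; partial-overlap scoring would be a reasonable alternative.

```python
def redaction_metrics(expected: set[tuple[int, int]],
                      redacted: set[tuple[int, int]]) -> dict:
    """Compare redacted spans with ground truth using exact span matches."""
    missed = expected - redacted
    true_positives = len(expected & redacted)
    return {
        # Share of sensitive spans the system failed to redact (lower is better).
        "false_negative_rate": len(missed) / len(expected) if expected else 0.0,
        # Share of redactions that were actually needed.
        "precision": true_positives / len(redacted) if redacted else 1.0,
        "missed_spans": sorted(missed),
    }


def extraction_accuracy(expected: dict[str, str], extracted: dict[str, str]) -> float:
    """Fraction of expected fields whose extracted value matches exactly."""
    if not expected:
        return 1.0
    correct = sum(1 for field, value in expected.items()
                  if extracted.get(field) == value)
    return correct / len(expected)
```

For redaction, the false-negative rate is the number to track and report, because a missed span is a potential breach, while an extra redaction is only an inconvenience.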

Shifting from “trust us” to “verify our security” reduces friction in procurement, accelerates approvals, and builds durable partnerships.

Conclusion: Partnering for a secure AI future

Generative AI can transform document-heavy work — editing, comparison, redaction, and extraction — with real gains in speed and accuracy. The hesitation you hear from boards and CISOs is rational: privacy breaches, opaque decision-making, and regulatory exposure are non-trivial. This paper shows those risks are manageable with the right architecture and governance.

Moving from consumer-grade tools to sovereign-grade platforms — Azure Government and AWS Bedrock on GovCloud — changes the equation. Physical and logical isolation, screened U.S. personnel, FedRAMP High and DoD authorizations, and contractual no-training policies establish a trustworthy baseline. From there, Azure Confidential Computing secures data even in use, while Bedrock Guardrails governs content and PII at the application layer in real time.

Layer in Nutrient’s NIST AI RMF-aligned program — clear governance, mapped risks, measured performance, and managed responses — and you move past reassurances to proof. The outcome: Clients get the benefits of AI without compromising what matters most — their data, their reputation, and the trust of their customers.

Secure AI won’t be won by raw model horsepower alone. It’ll be won by verifiable security and operational discipline. That’s the path Nutrient offers — powered by sovereign clouds, grounded in governance, and built for regulated work.